Author: Alex Shipps | MIT CSAIL

Date Published: October 16, 2024

A new method can train a neural network to sort corrupted data while anticipating next steps. It can make flexible plans for robots, generate high-quality video, and help AI agents navigate digital environments.

Flexible Sequence Models Bridge Gap between AI Vision and Robotics

In the current artificial intelligence (AI) landscape, sequence models have gained significant attention for their ability to analyze data and predict what comes next. Next-token prediction models, such as the one behind ChatGPT, build an answer to a user's query one word (token) at a time, anticipating each word from the ones before it. Full-sequence diffusion models such as Sora, by contrast, turn text prompts into realistic video by iteratively "denoising" an entire video sequence at once.

Researchers at the Massachusetts Institute of Technology's (MIT) Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed a simple change to the diffusion training scheme that makes sequence denoising considerably more flexible. The change is designed to address limitations of current models in applications such as computer vision and robotics.

The proposed method, called "Diffusion Forcing," aims to combine the strengths of both approaches. Next-token models can generate sequences of varying length, but they do so without awareness of desirable states far in the future, so they need extra mechanisms for long-horizon planning. Full-sequence diffusion models can steer a sequence toward such a future goal, but they cannot generate variable-length sequences. Diffusion Forcing is designed to do both, which could improve AI-generated content and robotic decision-making alike.

Diffusion Forcing borrows its name from teacher forcing, the conventional scheme for training next-token models. Both train a neural network to predict masked (noisy) tokens from unmasked ones; Diffusion Forcing differs in how flexibly that masking is applied. By sorting through noisy data and predicting future steps, the method can help robots ignore visual distractions and complete their tasks. It can also generate stable video sequences and guide AI agents through digital mazes.
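A minimal Python sketch of how a training example might be corrupted follows. It is not the authors' code: it assumes scalar tokens, a hypothetical linear schedule in which noise level 0 is clean and level `num_levels` is pure Gaussian noise, and illustrative function names (`alpha_bar`, `corrupt_sequence`, `teacher_forcing_levels`, `sample_training_levels`). Teacher forcing appears as the special case where every level is either 0 or the maximum, while the Diffusion-Forcing-style sampler draws an independent level for each token.

```python
import math
import random


def alpha_bar(level: int, num_levels: int) -> float:
    """Hypothetical linear schedule: 1.0 (clean) at level 0, 0.0 (pure noise) at num_levels."""
    return 1.0 - level / num_levels


def corrupt_sequence(tokens, levels, num_levels, rng):
    """Noise each scalar token at its own level: x_k = sqrt(a) * x_0 + sqrt(1 - a) * eps."""
    noisy, noises = [], []
    for x0, level in zip(tokens, levels):
        a = alpha_bar(level, num_levels)
        eps = rng.gauss(0.0, 1.0)
        noisy.append(math.sqrt(a) * x0 + math.sqrt(1.0 - a) * eps)
        noises.append(eps)
    return noisy, noises


def teacher_forcing_levels(seq_len, num_levels, split):
    """Binary masking: tokens before `split` are fully visible, the rest fully masked."""
    return [0 if t < split else num_levels for t in range(seq_len)]


def sample_training_levels(seq_len, num_levels, rng):
    """Fractional masking: an independent, uniformly drawn noise level for every token."""
    return [rng.randint(0, num_levels) for _ in range(seq_len)]


if __name__ == "__main__":
    rng = random.Random(0)
    clip = [0.5, -1.2, 0.3, 0.9]  # toy "frames", one scalar each
    K = 10  # hypothetical number of noise levels

    for name, levels in [
        ("teacher forcing", teacher_forcing_levels(len(clip), K, split=2)),
        ("per-token levels", sample_training_levels(len(clip), K, rng)),
    ]:
        noisy, _ = corrupt_sequence(clip, levels, K, rng)
        print(name, levels, [round(x, 2) for x in noisy])
```

A denoising network, not shown here, would receive `noisy` together with `levels` and be trained, for example, to predict each token's `eps`. Because training examples mix many noise levels, the same network can later be queried with any combination of clean, partly noisy, and fully noisy tokens.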

Lead author Boyuan Chen notes that sequence models typically condition on a known past to predict an unknown future, a form of binary masking in which each token is either fully visible or fully hidden. Diffusion Forcing instead adds a different level of noise to each token, effectively creating fractional masking. At test time, the system can then "unmask" a collection of tokens and diffuse the near-future part of a sequence at a lower noise level.
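One way to picture diffusing the near future at a lower noise level is a schedule matrix whose rows are denoising steps and whose columns are future tokens. The Python sketch below builds a simple staircase ("pyramid") schedule under our own assumptions: integer noise levels, a fixed planning horizon, and each token lagging one step behind the token before it. The function name `pyramid_schedule` is hypothetical, and the authors' actual sampling schedules may differ.

```python
def pyramid_schedule(horizon: int, num_levels: int) -> list[list[int]]:
    """Return rows of per-token noise levels, from all-noise to all-clean.

    Entry [m][t] is the noise level of future token t after denoising step m.
    Token t starts denoising t steps after token 0, so at every step nearer
    tokens sit at a lower (or equal) noise level than tokens further ahead.
    """
    rows = []
    for m in range(num_levels + horizon):
        rows.append([min(num_levels, max(0, num_levels - m + t)) for t in range(horizon)])
    return rows


if __name__ == "__main__":
    for row in pyramid_schedule(horizon=3, num_levels=2):
        print(row)
    # [2, 2, 2]
    # [1, 2, 2]
    # [0, 1, 2]
    # [0, 0, 1]
    # [0, 0, 0]
```

A sampler would walk consecutive rows, asking a denoising network to move each token from its level in row `m` to its level in row `m + 1`. Because the nearest token becomes clean first, it could be acted on or displayed while tokens further ahead are still being refined.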

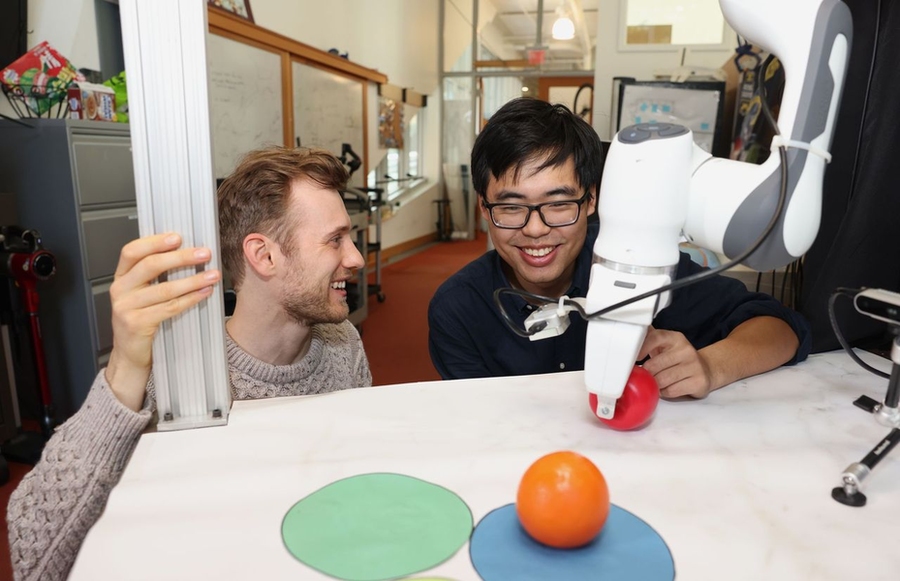

Experimental results demonstrate that Diffusion Forcing outperforms baseline models in various tasks, such as robot manipulation and video generation. In one example, it helped train a robotic arm to swap two toy fruits across three circular mats despite starting from random positions and encountering distractions. The method also generated higher-quality videos than comparable baselines when trained on Minecraft gameplay.

Diffusion Forcing could serve as the backbone of a "world model," an AI system that simulates real-world dynamics after training on billions of internet videos. Such a model might let robots perform novel tasks by reasoning about their surroundings, such as opening a door without having been explicitly trained to do so. The researchers are now working to scale up the method and explore its applications in robotics and beyond.

Senior author Vincent Sitzmann emphasizes the significance of bridging video generation and robotics with Diffusion Forcing. He envisions a future where robots can help in everyday life by leveraging knowledge from videos on the internet, leading to exciting research challenges like teaching robots to imitate humans through observation.

The study was supported by various organizations, including the U.S. National Science Foundation, Singapore Defence Science and Technology Agency, Intelligence Advanced Research Projects Activity, and Amazon Science Hub. The researchers will present their findings at NeurIPS in December.

Caption: Diffusion Forcing. Video: MIT CSAIL

Attribution: Reprinted with permission of MIT News, https://news.mit.edu/2024/combining-next-token-prediction-video-diffusion-computer-vision-robotics-1016

Author: Alex Shipps|MIT CSAIL

Date Published: Publication Date:October 16, 2024

A new method can train a neural network to sort corrupted data while anticipating next steps. It can make flexible plans for robots, generate high-quality video, and help AI agents navigate digital environments.

Flexible Sequence Models Bridge Gap between AI Vision and Robotics

In the current artificial intelligence (AI) landscape, sequence models have gained significant attention for their ability to analyze data and predict future outcomes. These models are utilized in various applications, such as next-token prediction, where algorithms like ChatGPT anticipate each word in a sequence to form answers to users' queries. Additionally, full-sequence diffusion models, similar to Sora, can transform words into visually stunning images by iteratively "denoising" entire video sequences.

Researchers at the Massachusetts Institute of Technology's (MIT) Computer Science and Artificial Intelligence Laboratory (CSAIL) have proposed an innovative approach to enhance sequence denoising, making it more flexible. This change in training scheme is designed to address limitations in current models used for applications like computer vision and robotics.

The proposed method, called "Diffusion Forcing," aims to combine the strengths of next-token and full-sequence diffusion models. By doing so, Diffusion Forcing can produce variable-length sequences while also enabling long-horizon planning – a significant advantage over traditional methods. This approach has the potential to improve AI-generated content and decision-making capabilities in robotics.

Diffusion Forcing trains neural networks to predict masked tokens from unmasked ones, similar to traditional teacher forcing techniques but with a more flexible and adaptable approach. By sorting through noisy data and predicting future steps, this method can aid robots in ignoring distractions and completing tasks efficiently. It also generates stable video sequences and guides AI agents through digital mazes.

Lead author Boyuan Chen notes that sequence models typically condition on known past information and predict the unknown future, a form of binary masking. However, with Diffusion Forcing, different levels of noise are added to each token, effectively creating fractional masking. This allows the system to "unmask" tokens and diffuse sequences in the near future at a lower noise level.

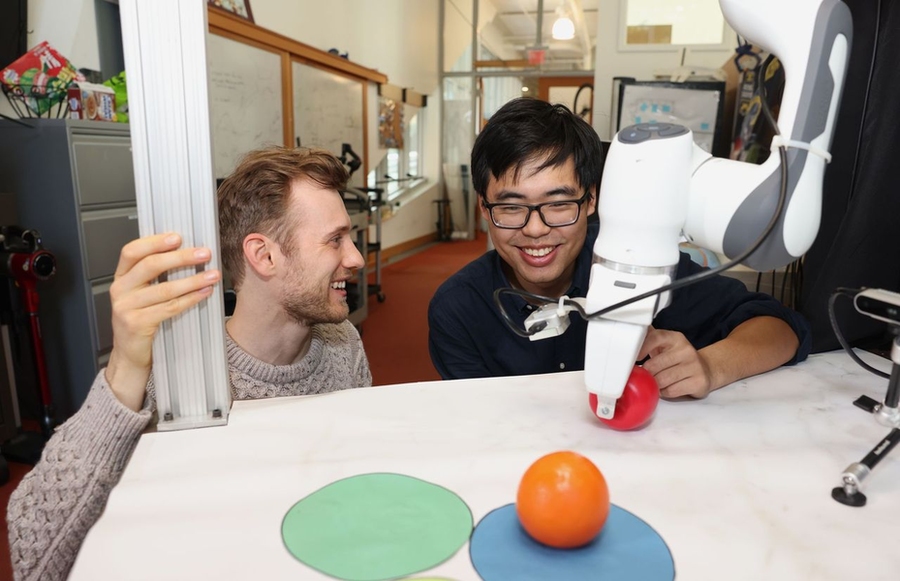

Experimental results demonstrate that Diffusion Forcing outperforms baseline models in various tasks, such as robot manipulation and video generation. In one example, it helped train a robotic arm to swap two toy fruits across three circular mats despite starting from random positions and encountering distractions. The method also generated higher-quality videos than comparable baselines when trained on Minecraft gameplay.

Diffusion Forcing has the potential to serve as a powerful backbone for a "world model," an AI system that can simulate real-world dynamics by training on billions of internet videos. This could enable robots to perform novel tasks based on their surroundings, such as opening doors without explicit training. The researchers are currently working to scale up the method and explore its applications in robotics and beyond.

Senior author Vincent Sitzmann emphasizes the significance of bridging video generation and robotics with Diffusion Forcing. He envisions a future where robots can help in everyday life by leveraging knowledge from videos on the internet, leading to exciting research challenges like teaching robots to imitate humans through observation.

The study was supported by various organizations, including the U.S. National Science Foundation, Singapore Defence Science and Technology Agency, Intelligence Advanced Research Projects Activity, and Amazon Science Hub. The researchers will present their findings at NeurIPS in December.

caption: Diffusion Forcing Video: MIT CSAIL

Attribution: Reprinted with permission of MIT News.,https://news.mit.edu/2024/combining-next-token-prediction-video-diffusion-computer-vision-robotics-1016